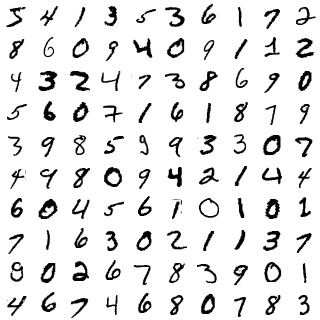

average categorize some mnist digits

So, it's taken some thinking, and computing time, but I have made a start on digit recognition. Though to be honest I'm not 100% convinced this approach will work, but it might. So worth exploring. The outline of my method is. Get some images from the MNIST set. Convert them to images. Convert those to k*k image ngrams (a kind of 2D version of the standard ngram idea). Then those into superpositions. Apply my average-categorize to that, then finally convert back to images, and tile the result. Yeah, my process needs optimizing, but it will do for now, while in the exploratory phase.

Now, the details, and then the results:

-- map MNIST idx format to csv, with tweaked code from here:

$ ./process_mnist.py

-- map first 100 mnist image-data to images:

$ ./mnist-data-to-images.py 100

-- map images to 10*10 image-ngrams sw file:

$ ./create-image-sw.py 10 work-on-handwritten-digits/images/mnist-image-*.bmp

-- rename sw file to something more specific:

$ mv image-ngram-superpositions-10.sw mnist-100--image-ngram-superpositions-10.sw

-- average categorize it (in this case with threshold t = 0.8):

$ ./the_semantic_db_console.py

sa: load mnist-100--image-ngram-superpositions-10.sw

sa: average-categorize[layer-0,0.8,phi,layer-1]

sa: save mnist-100--save-average-categorize--0_8.sw

-- convert these back to images (currently the source sw file is hard-coded into this script):

$ ./create-sw-images.py

-- tile the results:

$ cd work-on-handwritten-digits/

$ mv average-categorize-images mnist-100--average-categorize-images--threshold-0_8

$ ls -1tr mnist-100--average-categorize-images--threshold-0_8/* > image-list.txt

$ montage -geometry +2+2 @image-list.txt mnist-100--average-categorize-0_8.jpg

where montage is part of ImageMagick.

Now the results, starting with the 100 images we used as the training set (which resulted in 32,400 layer-0 superpositions/image-ngrams):

Average categorize with t = 0.8, with a run-time of 1 1/2 days, and resulted in 1,238 image ngrams:

Average categorize with t = 0.7, with a run-time of 6 1/2 hours, and resulted in 205 image ngrams:

Average categorize with t = 0.65, with a run-time of 3 hours 20 minutes, and resulted in 103 image ngrams:

Average categorize with t = 0.6, with a run-time of 1 hours 50 minutes, and resulted in 58 image ngrams:

Average categorize with t = 0.5, with a run-time of 43 minutes, and resulted in 18 image ngrams:

Now we have to see if we can do anything useful with these average-categorize image ngrams. The hope is to map test images to linear combinations of the image-ngrams, which we can represent in superposition form (details later), and then do digit classification on that using similar[op]. Though I'm pretty sure that is not sufficient processing to be successful, but it is a starting point. Eventually I'd like to use several layers of average-categorize, which I'm hoping will be more powerful.

Home

previous: introducing network k similarity

next: new tool phi transform

updated: 19/12/2016

by Garry Morrison

email: garry -at- semantic-db.org