spreading activation in bko

Another brief comparison, this time looking at spreading activation and how to encode it in BKO. I'm not going to give it much thought; this is just quick and dirty.

This image is on the wikipage:

And has this caption:

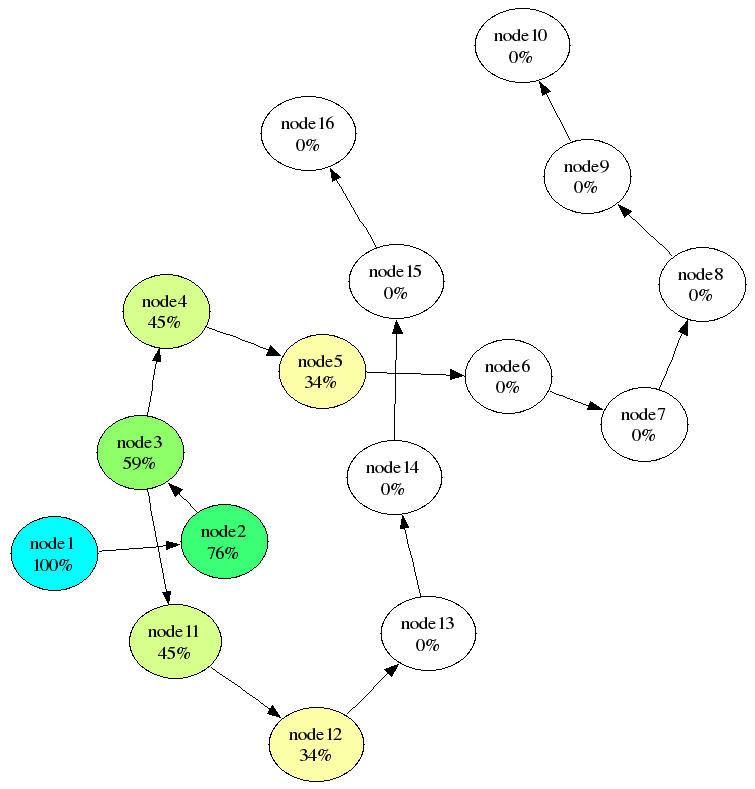

In this example, spreading activation originated at node 1 which has an initial activation value of 1.0 (100%). Each link has the same weight value of 0.9. The decay factor was 0.85. Four cycles of spreading activation have occurred. Color hue and saturation indicate different activation values.

The key things to note are that the link strength is 0.9 and the decay factor is 0.85. In BKO this becomes:

op |node 1> => 0.9|node 2>

op |node 2> => 0.9|node 3>

op |node 3> => 0.9|node 4> + 0.9|node 11>

op |node 11> => 0.9|node 12>

op |node 12> => 0.9|node 13>

op |node 13> => 0.9|node 14>

op |node 14> => 0.9|node 15>

op |node 15> => 0.9|node 16>

op |node 4> => 0.9|node 5>

op |node 5> => 0.9|node 6>

op |node 6> => 0.9|node 7>

op |node 7> => 0.9|node 8>

op |node 8> => 0.9|node 9>

op |node 9> => 0.9|node 10>

decay-factor |*> #=> 0.85 |_self>
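For anyone without the BKO shell to hand, here is a minimal Python sketch of the same network. It is not semantic-db code: the names `CHILDREN` and `decay_factor_op` are hypothetical, and it assumes a superposition can be modelled as a dict of node to coefficient, with every link weighted 0.9 and the decay factor applied as a flat 0.85 multiplier.

```python
# Hypothetical Python model of the BKO rules above (not semantic-db code).
LINK_WEIGHT = 0.9  # every "op" link has weight 0.9
DECAY = 0.85       # decay-factor |*> #=> 0.85 |_self>

# node -> list of child nodes, mirroring the "op |node n> => ..." rules
CHILDREN = {1: [2], 2: [3], 3: [4, 11]}
CHILDREN.update({n: [n + 1] for n in range(4, 10)})   # 4 -> 5 -> ... -> 10
CHILDREN.update({n: [n + 1] for n in range(11, 16)})  # 11 -> 12 -> ... -> 16


def decay_factor_op(activation):
    """Apply op (link weight) then decay-factor to a {node: coeff} dict."""
    result = {}
    for node, coeff in activation.items():
        for child in CHILDREN.get(node, []):
            # "+" in a superposition sums coefficients of the same ket
            result[child] = result.get(child, 0.0) + DECAY * LINK_WEIGHT * coeff
    return result


one_step = decay_factor_op({1: 100.0})
print(one_step)  # a single cycle: only node 2 is activated
```

Calling `decay_factor_op` repeatedly on its own output plays the role of stacking `decay-factor op` in the queries that follow.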

Now, let's "spread our activation":

sa: decay-factor op 100 |node 1>

76.5|node 2>

sa: decay-factor op decay-factor op 100 |node 1>

58.523|node 3>

sa: decay-factor op decay-factor op decay-factor op 100 |node 1>

44.77|node 4> + 44.77|node 11>

sa: decay-factor op decay-factor op decay-factor op decay-factor op 100 |node 1>

34.249|node 5> + 34.249|node 12>

This nicely reproduces the percentages in the graph above.
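The reason is that each hop multiplies the activation by the link weight and then by the decay factor. In my own notation (not the post's), with starting activation a_0, link weight w and decay factor D, a node d hops from node 1 has:

```latex
a_d = a_0\,(w \cdot D)^d = 100 \times (0.9 \times 0.85)^d = 100 \times 0.765^d
a_1 = 76.5,\quad a_2 = 58.5225,\quad a_3 \approx 44.770,\quad a_4 \approx 34.249
```

This closed form only works because the network is a tree: every node is reached by exactly one path from node 1, so no activations from different paths get summed. It is also why node 4 and node 11, both three hops out, end up with the same value.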

Now we can tidy this up:

-- define a merged decay-factor and op operator:

sa: decay-factor-op |*> #=> decay-factor op |_self>

-- shortcut for: (1 + decay-factor-op + decay-factor-op^2 + decay-factor-op^3 + decay-factor-op^4) 100 |node 1>

sa: exp[decay-factor-op,4] 100 |node 1>

100|node 1> + 76.5|node 2> + 58.523|node 3> + 44.77|node 4> + 44.77|node 11> + 34.249|node 5> + 34.249|node 12>
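To make the `exp[decay-factor-op,4]` shortcut concrete, here is a hypothetical Python sketch of "1 + op + op^2 + ... + op^n applied to a starting superposition". The graph model and the names `decay_factor_op`, `add` and `exp_op` are illustrative assumptions, not the semantic-db implementation.

```python
LINK_WEIGHT = 0.9  # weight on every op link
DECAY = 0.85       # decay-factor multiplier
CHILDREN = {1: [2], 2: [3], 3: [4, 11]}
CHILDREN.update({n: [n + 1] for n in range(4, 10)})   # 4 -> ... -> 10
CHILDREN.update({n: [n + 1] for n in range(11, 16)})  # 11 -> ... -> 16


def decay_factor_op(activation):
    """One spreading cycle: op (weight 0.9) followed by decay-factor (0.85)."""
    result = {}
    for node, coeff in activation.items():
        for child in CHILDREN.get(node, []):
            result[child] = result.get(child, 0.0) + DECAY * LINK_WEIGHT * coeff
    return result


def add(a, b):
    """Superposition addition: sum the coefficients of matching nodes."""
    total = dict(a)
    for node, coeff in b.items():
        total[node] = total.get(node, 0.0) + coeff
    return total


def exp_op(op, n, start):
    """Hypothetical exp[op,n]: (1 + op + op^2 + ... + op^n) applied to start."""
    total, term = dict(start), dict(start)
    for _ in range(n):
        term = op(term)           # next power of the operator
        total = add(total, term)  # accumulate it into the running sum
    return total


spread = exp_op(decay_factor_op, 4, {1: 100.0})
for node, coeff in spread.items():
    print(f"node {node}: {coeff:.3f}")
```

The "1" term is just the starting superposition itself, which is why `100|node 1>` appears in the result.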

That is enough for now.

Update: now, with the magic of tables, we can show the activation spreading through the entire network:

sa: decay-factor-op |*> #=> decay-factor op |_self>

sa: table[node,coeff] exp-max[decay-factor-op] 100 |node 1>

+---------+--------+
| node    | coeff  |
+---------+--------+
| node 1  | 100    |
| node 2  | 76.5   |
| node 3  | 58.523 |
| node 4  | 44.77  |
| node 11 | 44.77  |
| node 5  | 34.249 |
| node 12 | 34.249 |
| node 6  | 26.2   |
| node 13 | 26.2   |
| node 7  | 20.043 |
| node 14 | 20.043 |
| node 8  | 15.333 |
| node 15 | 15.333 |
| node 9  | 11.73  |
| node 16 | 11.73  |
| node 10 | 8.973  |
+---------+--------+
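Here is a rough Python sketch of what `exp-max` is doing, under an assumption I'm making rather than one taken from the semantic-db source: keep adding further powers of the operator until a cycle reaches no new nodes. That rule suffices for this acyclic network; `max_steps` is an extra hypothetical safety cap so a cyclic graph cannot loop forever. All names are illustrative.

```python
LINK_WEIGHT = 0.9  # weight on every op link
DECAY = 0.85       # decay-factor multiplier
CHILDREN = {1: [2], 2: [3], 3: [4, 11]}
CHILDREN.update({n: [n + 1] for n in range(4, 10)})   # 4 -> ... -> 10
CHILDREN.update({n: [n + 1] for n in range(11, 16)})  # 11 -> ... -> 16


def decay_factor_op(activation):
    """One spreading cycle: op (weight 0.9) followed by decay-factor (0.85)."""
    result = {}
    for node, coeff in activation.items():
        for child in CHILDREN.get(node, []):
            result[child] = result.get(child, 0.0) + DECAY * LINK_WEIGHT * coeff
    return result


def exp_max(op, start, max_steps=1000):
    """Assumed exp-max behaviour: add op^k terms until a cycle reaches no nodes.

    max_steps is a hypothetical safety cap, not part of the BKO operator.
    """
    total, term = dict(start), dict(start)
    for _ in range(max_steps):
        term = op(term)
        if not term:  # no outgoing links left: the spread has finished
            break
        for node, coeff in term.items():
            total[node] = total.get(node, 0.0) + coeff
    return total


spread = exp_max(decay_factor_op, {1: 100.0})
print(f"{'node':<8} | coeff")
for node, coeff in spread.items():
    print(f"{'node ' + str(node):<8} | {coeff:.3f}")
```

The spread stops after node 10, the deepest node (nine hops from node 1), because it has no outgoing `op` rule.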

Now a tweak: the signal drops to zero if its activation is below 20%:

sa: table[node,coeff] threshold-filter[20] exp-max[decay-factor-op] 100 |node 1>

+---------+--------+
| node    | coeff  |
+---------+--------+
| node 1  | 100    |
| node 2  | 76.5   |
| node 3  | 58.523 |
| node 4  | 44.77  |
| node 11 | 44.77  |
| node 5  | 34.249 |
| node 12 | 34.249 |
| node 6  | 26.2   |
| node 13 | 26.2   |
| node 7  | 20.043 |
| node 14 | 20.043 |
| node 8  | 0      |
| node 15 | 0      |
| node 9  | 0      |
| node 16 | 0      |
| node 10 | 0      |
+---------+--------+
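Judging by the table, `threshold-filter[20]` zeroes any coefficient below 20 but keeps the node in the superposition. A hypothetical Python equivalent follows. To keep the snippet standalone it recomputes the activations as 100 × 0.765^depth, which holds only because this network is a tree. Whether a value exactly equal to the threshold survives is my assumption, since the example never hits that case.

```python
# Hypothetical stand-in for the exp-max output above (not semantic-db code):
# in this tree-shaped network a node d hops from node 1 has activation 100 * 0.765**d.
DEPTH = {1: 0, 2: 1, 3: 2}
DEPTH.update({n: n - 1 for n in range(4, 11)})   # node 4 is 3 hops away ... node 10 is 9
DEPTH.update({n: n - 8 for n in range(11, 17)})  # node 11 is 3 hops away ... node 16 is 8
activation = {n: 100 * 0.765 ** d for n, d in DEPTH.items()}


def threshold_filter(threshold, superposition):
    """Sketch of threshold-filter[t]: coefficients below t become 0, nodes are kept.

    Keeping values exactly equal to t is an assumption.
    """
    return {node: (coeff if coeff >= threshold else 0.0)
            for node, coeff in superposition.items()}


filtered = threshold_filter(20, activation)
for node in sorted(filtered, key=lambda n: (DEPTH[n], n)):  # same order as the table
    print(f"node {node:<3} | {filtered[node]:.3f}")
```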

Anyway, all simple enough.

Update: at this stage we have more than proved that we can represent most things in BKO (sp-learn rules are currently an exception; they are waiting for me to finish the parsley implementation of extract-compound-superposition). We are now at the stage of finding ways to learn knowledge with minimal input from a human.

updated: 19/12/2016

by Garry Morrison

email: garry -at- semantic-db.org